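static string GetCachedData(string key, DateTimeOffset offset)
{
    // Lazy<string> defers the expensive call until .Value is first read
    Lazy<string> lazyObject = new Lazy<string>(() => SomeHeavyAndExpensiveCalculationThatReturnsAString());

    // returns null when the item has just been added, the existing item otherwise
    var available = MemoryCache.Default.AddOrGetExisting(key, lazyObject, offset);

    if (available == null)
        return lazyObject.Value;
    else
        return ((Lazy<string>)available).Value;
}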

It relies on the MemoryCache::AddOrGetExisting returning null on the very first call that adds the item to the cache but returning the cached item in case it already exists in the cache.

A clever trick worth remembering.
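The same add-or-get control flow can be sketched in JavaScript. This is only an illustration under stated assumptions: a plain Map stands in for MemoryCache (no expiration), and lazy, addOrGetExisting and getCachedData are hypothetical helpers written for this sketch, not part of any library.

```javascript
// Hypothetical helper: wraps a factory so that it runs at most once (a stand-in for Lazy<T>).
function lazy(factory) {
  let computed = false;
  let value;
  return {
    get value() {
      if (!computed) {
        value = factory();
        computed = true;
      }
      return value;
    }
  };
}

// Assumption: a plain Map plays the role of MemoryCache.Default, without expiration.
const cache = new Map();

// Mimics AddOrGetExisting: null when the item has just been added,
// the already cached item otherwise.
function addOrGetExisting(key, item) {
  if (cache.has(key)) {
    return cache.get(key);
  }
  cache.set(key, item);
  return null;
}

function getCachedData(key, expensiveCalculation) {
  const lazyObject = lazy(expensiveCalculation);
  const available = addOrGetExisting(key, lazyObject);
  return available === null ? lazyObject.value : available.value;
}

// Usage: the expensive function is invoked only once for a given key.
let calls = 0;
const compute = () => { calls++; return 'result'; };
console.log(getCachedData('key', compute));
console.log(getCachedData('key', compute));
console.log(calls);
```

In single-threaded JavaScript the race that the C# version guards against does not arise in the same form, so the sketch mirrors only the control flow, not the thread-safety guarantee.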

Wednesday, November 22, 2017

Useful caching pattern

Monday, November 13, 2017

Node.js, circularly dependent modules and intellisense in VS Code

// a.js

var B = require('./b');

class A {

createB() {

return new B();

}

}

module.exports = A;

// b.js

var A = require('./a');

class B {

createA() {

return new A();

}

}

module.exports = B;

// app.js

var A = require('./a');

var B = require('./b');

var a = new B().createA();

var b = new A().createB();

console.log(a);

console.log(b);

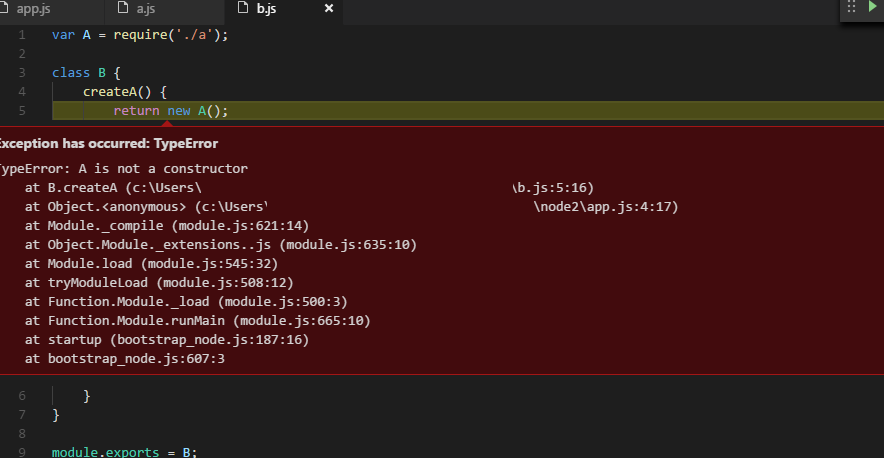

Although this looks correct and intellisense works correctly, this of course doesn't work because of the circular dependency.

And while there are multiple resources that cover the topic (e.g. [1] or [2]), I'd like to emphasize that VS Code does a nice job of inferring the actual type of the value returned from a method, regardless of which approach to the issue you take.

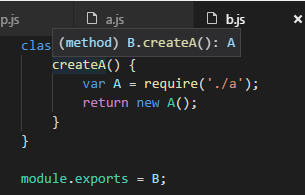

Let's check the first approach, where you inline the reference

// b.js

class B {

createA() {

var A = require('./a');

return new A();

}

}

module.exports = B;

Note that VS Code still correctly infers the return type of the method:

The second approach involves moving requires to the end of both modules

// a.js

class A {

createB() {

return new B();

}

}

module.exports = A;

var B = require('./b');

// b.js

class B {

createA() {

return new A();

}

}

module.exports = B;

var A = require('./a');

The type inference still works.

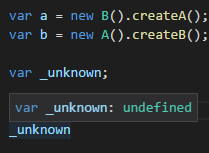

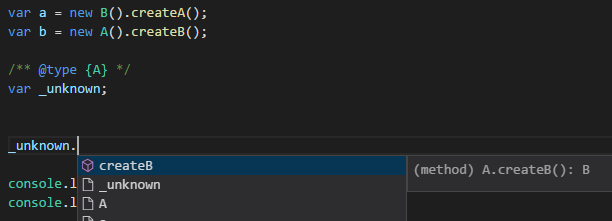

The last fact worth mentioning is that VS Code respects JSDoc's @type annotation: whenever the type can't be inferred automatically, you can help it.

Take a look at VS Code not being able to infer the actual type of a variable

var a = new B().createA();

var b = new A().createB();

var _unknown;

and how a hint helps it

var a = new B().createA();

var b = new A().createB();

/** @type {A} */

var _unknown;

Monday, October 9, 2017

Javascript Puzzle No.2 (beginner)

Is it really so? This little snippet shows that Object.keys doesn't really enumerate keys but rather ... values.

var p = {

foo : 0,

bar : 1

};

for ( var e in p ) {

console.log( e );

}

for ( var e in Object.keys( p ) ) {

console.log( e );

}

The snippet prints

foo bar 0 1

while it definitely should print

foo bar foo bar

What's wrong here?

Thursday, October 5, 2017

JWT tokens between .NET and node.js

var jwt = require('jsonwebtoken');

var secret = "This is my shared, not so secret, secret!";

var payload = {

"unique_name":"myemail@myprovider.com",

"role":"Administrator",

"nbf":1499944099,

"exp":1599947699,

"iat":1499944099,

"iss":"http://my.tokenissuer.com",

"aud":"http://my.website.com"};

var token = jwt.sign(payload, secret);

console.log( token );

// -------------------------

var decoded = jwt.verify(token, secret);

console.log( decoded.unique_name );

thanks to the jsonwebtoken package.

In the case of .NET, things are a little bit more complicated as the JWT is implemented in System.IdentityModel.Tokens.Jwt. This is unfortunate, as the 5.x.x version of this library has been reimplemented to rely on Microsoft.IdentityModel. Because of this, many older tutorials became outdated and people tend to run into various issues.

To get working code, reference System.IdentityModel.Tokens.Jwt 5.1.4 or newer from NuGet and then

using Microsoft.IdentityModel.Tokens;

using System;

using System.Collections.Generic;

using System.IdentityModel.Tokens.Jwt;

using System.Security.Claims;

using System.Text;

namespace ConsoleApplication

{

class Program

{

static void Main(string[] args)

{

var plainTextSecurityKey = "This is my shared, not so secret, secret!";

var signingKey = new Microsoft.IdentityModel.Tokens.SymmetricSecurityKey(

Encoding.UTF8.GetBytes(plainTextSecurityKey));

var signingCredentials = new Microsoft.IdentityModel.Tokens.SigningCredentials(signingKey,

Microsoft.IdentityModel.Tokens.SecurityAlgorithms.HmacSha256Signature);

// -------------------------

var claimsIdentity = new ClaimsIdentity(new List<Claim>()

{

new Claim(ClaimTypes.Name, "myemail@myprovider.com"),

new Claim(ClaimTypes.Role, "Administrator"),

}, "Custom");

var securityTokenDescriptor = new Microsoft.IdentityModel.Tokens.SecurityTokenDescriptor()

{

Audience = "http://my.website.com",

Issuer = "http://my.tokenissuer.com",

Subject = claimsIdentity,

SigningCredentials = signingCredentials

};

var tokenHandler = new JwtSecurityTokenHandler();

var plainToken = tokenHandler.CreateToken(securityTokenDescriptor);

var signedAndEncodedToken = tokenHandler.WriteToken(plainToken);

Console.WriteLine(plainToken);

Console.WriteLine(signedAndEncodedToken);

// -------------------------

var tokenValidationParameters = new TokenValidationParameters()

{

ValidAudiences = new string[]

{

"http://my.website.com",

"http://my.otherwebsite.com"

},

ValidIssuers = new string[]

{

"http://my.tokenissuer.com",

"http://my.othertokenissuer.com"

},

IssuerSigningKey = signingKey

};

Microsoft.IdentityModel.Tokens.SecurityToken validatedToken;

var validatedPrincipal = tokenHandler.ValidateToken(signedAndEncodedToken,

tokenValidationParameters, out validatedToken);

Console.WriteLine(validatedPrincipal.Identity.Name);

Console.WriteLine(validatedToken.ToString());

Console.ReadLine();

}

}

}
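Both sides can exchange tokens because an HMAC-SHA256 signed JWT is just three base64url-encoded parts, where the third part is the HMAC of the first two computed with the shared secret (jsonwebtoken signs with HS256 by default, and the .NET code above asks for HMAC-SHA256 too). A minimal sketch that uses only node's built-in crypto module follows. The helper names base64url, signHs256 and verifyHs256 are made up for this illustration, and the sketch deliberately skips the claim validation (exp, nbf, aud, iss) that the real libraries perform.

```javascript
const crypto = require('crypto');

// base64url = base64 without padding, with '+' and '/' replaced by '-' and '_'
function base64url(input) {
  return Buffer.from(input)
    .toString('base64')
    .replace(/=+$/, '')
    .replace(/\+/g, '-')
    .replace(/\//g, '_');
}

function fromBase64url(text) {
  return Buffer.from(text.replace(/-/g, '+').replace(/_/g, '/'), 'base64');
}

// Hypothetical helper: builds header.payload.signature by hand
function signHs256(payload, secret) {
  const header = { alg: 'HS256', typ: 'JWT' };
  const signingInput =
    base64url(JSON.stringify(header)) + '.' + base64url(JSON.stringify(payload));
  const signature = crypto.createHmac('sha256', secret).update(signingInput).digest();
  return signingInput + '.' + base64url(signature);
}

// Hypothetical helper: checks the signature only, no claim validation
function verifyHs256(token, secret) {
  const parts = token.split('.');
  if (parts.length !== 3) {
    throw new Error('malformed token');
  }
  const expected = crypto.createHmac('sha256', secret)
    .update(parts[0] + '.' + parts[1])
    .digest();
  const actual = fromBase64url(parts[2]);
  if (actual.length !== expected.length || !crypto.timingSafeEqual(actual, expected)) {
    throw new Error('invalid signature');
  }
  return JSON.parse(fromBase64url(parts[1]).toString('utf8'));
}

const secret = 'This is my shared, not so secret, secret!';
const token = signHs256({ unique_name: 'myemail@myprovider.com', role: 'Administrator' }, secret);
console.log(verifyHs256(token, secret).unique_name);
```

This is meant for understanding the format only; for production code the libraries shown above remain the safer choice.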

Thursday, September 28, 2017

Javascript Puzzle No.1 (beginner)

function* foo()

{

var n = 0;

while ( true ) {

yield n++;

}

}

This seemingly creates an infinite enumeration of 0, 1, 2, etc. However, a simple test

for ( var i=0; i<10; i++ )

{

console.log( foo().next().value );

}

reveals it doesn't really do what it's supposed to

0 0 0 0 0 0 0 0 0 0

The question here is to explain the incorrect behavior of the generator.

Monday, September 11, 2017

ADFS and the SAML2 Artifact Binding woes

We've been using WS-Fed for ages; I myself wrote a couple of tutorials and was often emailed by people asking for some details. As time passes, WS-Fed is pushed away in favor of the SAML2 protocol. And yes, SAML2 has some significant advantages over WS-Fed, namely it supports multiple bindings.

The idea behind bindings is to allow both the token request and the actual token response to be transmitted in various ways. Specifically, if you have ever worked with WS-Fed, you are aware that the request from the Relying Party to the Identity Provider is passed over the browser's query string

https://idp.com?wa=wsignin1.0&wtrealm=https://rp.com

and the response is always passed in an HTML form that is POSTed to the Relying Party. On the other hand, OAuth2/OpenID Connect have a different way of passing messages - while the request is also passed over the browser's query string

https://idp.com?response_type=code&client_id=foobar&redirect_uri=https://rp.com&scope=profile

it is the RP that creates a direct HTTPS request to the IdP's profile endpoint to retrieve the user token.

This subtle difference has substantial consequences. In particular:

- since the WS-Fed SAML1.1 token is passed from the IdP to the RP using the client's browser, it has to be signed to prevent it from being tampered with. Also, large tokens could possibly introduce unwanted lag as it unfortunately takes some time to POST large data from a browser to a webserver

- the OAuth2 tokens don't have to be signed as the trust is established by direct requests from the RP to the IdP, which, on the other hand, could possibly be one of the attack vectors (suppose the DNS can be poisoned at the RP's side and direct requests from the RP to the IdP target an attacker's site instead of the IdP)

These two examples show that there are three different ways of passing the data between the RP and the IdP and this is exactly what the three most important SAML2 bindings are about

- the HTTP-Redirect binding consists of a 302 redirect with the data passed in the query string

- the HTTP-POST binding consists of an HTTP POSTed form where the data is included in the form's inputs

- the Artifact binding consists of a redirect (302 or POST) where only a single artifact is returned; the receiver creates an HTTPS request passing the artifact as an argument and gets the data as the response

For example, the RP can use the HTTP-Redirect binding to initiate the flow and then the IdP can use the HTTP-POST binding to send the token back (this particular combination of bindings would make such SAML2 negotiation very similar to WS-Fed):

Or, the RP can use the HTTP-Redirect binding to send the request to the IdP but can get the response back with the Artifact binding (which would make it look similar to OAuth2):

What this blog entry is about is how difficult it was to implement the SAML2's Artifact binding in a scenario where the ADFS is the actual Identity Provider.

First, why would you implement SAML2 yourself? Well, for a few reasons:

- practical - SAML2 is not supported by the base class library, although SAML1.1 (WS-Fed) is. There are some classes in the base class library (the SAML2 token, the token handler) but the SAML2 protocol classes (handling different bindings) are missing. It's just as if somebody had started the work but was interrupted in the middle of it and was never allowed to return and finish the job. There are some free and paid implementations (look here, or here, or here and google paid libraries on your own)

- practical - although some SAML2 implementations can be found, it looks like this particular binding, the Artifact binding, is not always supported, even though the other two bindings usually are

- theoretical - to better understand all the details and have full control over what happens under the hood of the Artifact binding

The implementation turned out to be more or less straightforward as far as the HTTP-Redirect and HTTP-POST bindings are concerned. It turned out, however, that the Artifact binding is a malicious strain and just doesn't want to cooperate that easily. Problems started when ADFS was expected to return the artifact and the Artifact Resolve endpoint at the ADFS's side was about to be queried so that the artifact could be exchanged for a SAML2 token. The following list enumerates some possible issues and can be used as a checklist not only by implementors but also by administrators who configure a channel between a Relying Party and ADFS based on the Artifact binding.

- make sure you read the SAML 2.0 ADFS guide from Microsoft

- make sure the Artifact Resolve endpoint is turned on in the ADFS configuration

- make sure the signing certificate is imported in the Relying Party Trust Properties on the Signing tab - the ArtifactResolve request has to be signed with the RP's certificate, otherwise ADFS will reject the request (actually, ADFS tends to return an empty ArtifactResponse, with StatusCode equal to urn:oasis:names:tc:SAML:2.0:status:Success but no token included)

- make sure you set the correct signature algorithm in the Properties on the Advanced tab - by default ADFS expects the ArtifactResolve to be signed using SHA256RSA, however, your certificate can be a SHA1RSA one

- make sure you consult the Event Log entries (under Applications and Services Logs/AD FS 2.0/Admin) to find detailed information on request processing. What you get at the RP's side after sending an invalid request is just urn:oasis:names:tc:SAML:2.0:status:Requester (which means that your request is incorrect for some reason) or urn:oasis:names:tc:SAML:2.0:status:Responder (which means ADFS is misconfigured). On the other hand, peeking into the system log can sometimes reveal additional details, e.g.

- make sure you sign the ArtifactResolve request and then put it into a SOAP Envelope. Make sure you don't sign the whole envelope. This is what a correct request should look like

<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">
  <s:Body>
    <samlp:ArtifactResolve xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol"
        xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion"
        ID="_cce4ee769ed970b501d680f697989d14" Version="2.0"
        IssueInstant="current-date-here e.g. 2017-09-11T12:00:00">
      <saml:Issuer>the ID of the RP from ADFS config e.g. https://rp.com</saml:Issuer>
      <ds:Signature xmlns:ds="http://www.w3.org/2000/09/xmldsig#">...</ds:Signature>
      <samlp:Artifact>the artifact goes here</samlp:Artifact>
    </samlp:ArtifactResolve>
  </s:Body>
</s:Envelope>

- (this one was tricky) make sure the Signature goes between the Issuer and Artifact nodes. By taking a look into the ADFS sources (the Microsoft.IdentityServer.dll) you can learn that ADFS has its own exclusive, hand-written signature validation algorithm which doesn't use .NET's SignedXml class at all. This manually coded algorithm expects this particular order of nodes and logs 'Element' is an invalid XmlNodeType (as shown in the screenshot above, not too helpful) if you put your signature as the very last node. Frankly, I don't see any sense in this limitation at all.

- make sure you set SignedInfo's CanonicalizationMethod to SignedXml.XmlDsigExcC14NTransformUrl (which resolves to http://www.w3.org/2001/10/xml-exc-c14n#) (for some reason ADFS just doesn't accept the default SignedXml.XmlDsigC14NTransformUrl aka http://www.w3.org/TR/2001/REC-xml-c14n-20010315)

- (this was even trickier) make sure you add XmlDsigExcC14NTransform to the Reference element of the signature. The relevant part of the ArtifactResolve code is presented below. It expects the ArtifactResolve XML in a string form, the signing certificate and the id of the ArtifactResolve (to put it into the signature)

public string SignArtifactResolve(string artifactResolveBody, X509Certificate2 certificate, string id)
{
    if (artifactResolveBody == null || certificate == null || string.IsNullOrEmpty(id))
        throw new ArgumentNullException();

    XmlDocument doc = new XmlDocument();
    doc.LoadXml(artifactResolveBody);

    SignedXml signedXml = new SignedXml(doc);
    signedXml.SigningKey = certificate.PrivateKey;
    signedXml.SignedInfo.CanonicalizationMethod = SignedXml.XmlDsigExcC14NTransformUrl; // "http://www.w3.org/2001/10/xml-exc-c14n#"

    // Create a reference to be signed.
    Reference reference = new Reference();
    reference.Uri = id;

    // Add an enveloped transformation to the reference.
    XmlDsigEnvelopedSignatureTransform env = new XmlDsigEnvelopedSignatureTransform(true);
    reference.AddTransform(env);

    XmlDsigExcC14NTransform c14n = new XmlDsigExcC14NTransform();
    c14n.Algorithm = SignedXml.XmlDsigExcC14NTransformUrl;
    reference.AddTransform(c14n);

    // Add the reference to the SignedXml object.
    signedXml.AddReference(reference);

    // Compute the signature.
    signedXml.ComputeSignature();

    // Get the XML representation of the signature and save it to an XmlElement object.
    XmlElement xmlDigitalSignature = signedXml.GetXml();

    // the signature goes directly between Issuer and Artifact;
    // Artifact is the last child, so the signature is inserted right before it
    var artifactChild = doc.DocumentElement.ChildNodes[doc.DocumentElement.ChildNodes.Count - 1];
    doc.DocumentElement.InsertBefore(doc.ImportNode(xmlDigitalSignature, true), artifactChild);

    // soap enveloped
    return CreateSoapEnvelope(doc.OuterXml);
}

private string CreateSoapEnvelope(string soapBodyContent)
{
    if (string.IsNullOrEmpty(soapBodyContent))
        throw new ArgumentNullException();

    return string.Format("<?xml version=\"1.0\" encoding=\"utf-8\"?><s:Envelope xmlns:s=\"http://schemas.xmlsoap.org/soap/envelope/\"><s:Body>{0}</s:Body></s:Envelope>", soapBodyContent);
}

- (this was slightly tricky) when sending the SOAP Envelope with the ArtifactResolve to ADFS, make sure you add the SOAPAction header set to http://www.oasis-open.org/committees/security. Also make sure you set the Content-Type to text/xml
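The last item of the checklist can be sketched as a small helper that wraps an already signed ArtifactResolve into the SOAP Envelope and prepares the two headers. It is written in JavaScript only to keep the illustration short; buildArtifactResolveRequest is a hypothetical name, the input string is a placeholder, and actually sending the request (e.g. with node's https module) is left out.

```javascript
// Wraps the signed ArtifactResolve into a SOAP 1.1 envelope (the envelope itself is not signed)
function createSoapEnvelope(soapBodyContent) {
  if (!soapBodyContent) {
    throw new Error('soapBodyContent is required');
  }
  return '<?xml version="1.0" encoding="utf-8"?>' +
    '<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">' +
    '<s:Body>' + soapBodyContent + '</s:Body>' +
    '</s:Envelope>';
}

// Hypothetical helper: returns everything an HTTP client needs to POST the request
function buildArtifactResolveRequest(signedArtifactResolveXml) {
  const body = createSoapEnvelope(signedArtifactResolveXml);
  return {
    method: 'POST',
    headers: {
      'Content-Type': 'text/xml',
      'SOAPAction': 'http://www.oasis-open.org/committees/security',
      'Content-Length': Buffer.byteLength(body, 'utf8')
    },
    body: body
  };
}

// Usage with a placeholder instead of a real, signed ArtifactResolve
const request = buildArtifactResolveRequest('<samlp:ArtifactResolve>...</samlp:ArtifactResolve>');
console.log(request.headers);
```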

A correct ArtifactResolve request makes ADFS return the ArtifactResponse document which looks more or less like

<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">
  <s:Body>
    <samlp:ArtifactResponse ID="_d9f59a16-8189-4095-9f57-1033439cb1f3" Version="2.0" IssueInstant="2017-09-11T10:20:49.527Z"
        Consent="urn:oasis:names:tc:SAML:2.0:consent:unspecified" InResponseTo="_id" xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol">
      <Issuer xmlns="urn:oasis:names:tc:SAML:2.0:assertion">http://adfs.local/adfs/services/trust</Issuer>
      <samlp:Status>
        <samlp:StatusCode Value="urn:oasis:names:tc:SAML:2.0:status:Success" />
      </samlp:Status>
      <samlp:Response ID="_e0d8b313-ba58-4325-a9af-39a406a73bc8" Version="2.0" IssueInstant="2017-09-11T10:20:49.277Z" Destination="https://rp.com"
          Consent="urn:oasis:names:tc:SAML:2.0:consent:unspecified" InResponseTo="guid_2e0575b5-37b5-4546-a209-1bbc725c6532">
        <Issuer xmlns="urn:oasis:names:tc:SAML:2.0:assertion">http://adfs.local/adfs/services/trust</Issuer>
        <samlp:Status>
          <samlp:StatusCode Value="urn:oasis:names:tc:SAML:2.0:status:Success" />
        </samlp:Status>
        <Assertion ID="_04f54457-70ce-4850-a639-c068a0f801db" IssueInstant="2017-09-11T10:20:49.277Z" ...>
          <Issuer>http://adfs.local/adfs/services/trust</Issuer>
          <ds:Signature xmlns:ds="http://www.w3.org/2000/09/xmldsig#">...</ds:Signature>
          <Subject>...</Subject>
          <Conditions>...</Conditions>
          <AttributeStatement>
            <Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name">
              <AttributeValue>foo</AttributeValue>
            </Attribute>
            <Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/surname">
              <AttributeValue>Foo</AttributeValue>
            </Attribute>
            <Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/givenname">
              <AttributeValue>Bar</AttributeValue>
            </Attribute>
          </AttributeStatement>
          <AuthnStatement AuthnInstant="2017-09-11T10:20:49.199Z">
            <AuthnContext>
              <AuthnContextClassRef>urn:oasis:names:tc:SAML:2.0:ac:classes:PasswordProtectedTransport</AuthnContextClassRef>
            </AuthnContext>
          </AuthnStatement>
        </Assertion>
      </samlp:Response>
    </samlp:ArtifactResponse>
  </s:Body>
</s:Envelope>

The response has to be extracted from the SOAP Envelope and verified (assertions are signed). Then, it can be passed down the pipeline as if it comes from any other binding.

Tuesday, August 29, 2017

Debugging Javascript Webdriver IO in Visual Studio Code revisited

- create a new folder under VS

- npm init

- npm install webdriverio

- .\node_modules\.bin\wdio config, accept default answers but remember to check selenium-standalone when asked about which services should be added

- the .\wdio.conf.js file is created

- create an example test method in a file .\test\test1.js (the example code is taken from the WebdriverIO page)

var assert = require('assert');

describe('Top level', function() {
    it( 'Unit test function definition', function() {
        browser.url('https://duckduckgo.com/');
        browser.setValue('#search_form_input_homepage', 'WebdriverIO');
        browser.click('#search_button_homepage');
        var title = browser.getTitle();
        assert.equal(title, 'WebdriverIO na DuckDuckGo');
    });
});

Note that the actual title description is language specific so you will probably have to tweak the assertion. Mine is specific to my Polish locale.

- modify the .\.vscode\launch.json so that when F5 is hit, the web driver io is invoked

{
    "version": "0.2.0",
    "configurations": [
        {
            "type": "node",
            "request": "launch",
            "protocol": "inspector",
            "port": 5859,
            "name": "WebdriverIO",
            "runtimeExecutable": "${workspaceRoot}/node_modules/.bin/wdio",
            "windows": {
                "runtimeExecutable": "${workspaceRoot}/node_modules/.bin/wdio.cmd"
            },
            "timeout": 1000000,
            "cwd": "${workspaceRoot}",
            "console": "integratedTerminal",
            "args":[
                "", "wdio.conf.js", "--spec", "test/test1.js"
            ]
        }
    ]
}

Note that the protocol and the port are explicit

- go to the .\wdio.conf.js file and add

debug: true,
execArgv: ['--inspect=127.0.0.1:5859'],

flags that set the very same debugging port VS is expecting. You can also replace FF with Chrome in the capabilities section.

- create a breakpoint, hit F5 and see that this time the VS picks the breakpoint correctly

Friday, June 2, 2017

HTTP/2, Keep-Alive and Safari browsers DDoSing your site

A supposedly common scenario, where you have an HTTP/2 enabled reverse proxy layer in front of your application servers, can have an unexpected side effect we have lately stumbled upon.

The problem is described by users of Safari browsers (regardless of the actual device, it affects iPhones, iPads as well as the desktop Mac OS) as "the page load time is very long". And indeed, where the page loads in milliseconds on any other browser on any other device, this combination of Apple devices and Safari browsers makes users wait for 5 to 30 seconds before the page is rendered.

Unfortunately, the issue has a disastrous impact on the actual infrastructure - it is not the page that just loads long. It's much worse. It is the browser that makes as many requests as it is physically able to until it is finally satisfied and renders the page. If a client-side console is consulted in a browser - you see a single request. But from the server's perspective, it's the browser making hundreds of requests per second! During our tests, we have logged Safari waiting about 30 seconds before it rendered the page, making approximately 15000 requests to the server.

A complete disaster, a handful of Safaris could possibly take down a smaller site and even for us it was something noticeable.

We lost a few days tracking the issue, capturing the traffic with various HTTP debuggers and TCP packet analyzers on various devices and trying to narrow the issue down to see a pattern behind it.

What it finally turned out to be was the combination of HTTP/2, Reverse proxy layer and specific configuration behind it.

It all starts with the common Connection: keep-alive header that is used to keep the TCP connection so that the browser doesn't open new connections when requesting consecutive resources.

The problem with this header is that since it is a default setting for HTTP/1.1, different servers treat it differently. In particular, the IIS doesn't send it by default while Apache always sends it by default.

And guess what, since Apache sends it, you can have an issue when it is Apache that sits behind an HTTP/2 enabled proxy.

The scenario is as follows. The browser makes a request to your site and is upgraded to HTTP/2 by your front servers. Then, the actual server is requested and if it is the Apache (or any other server that sends the header) but HTTP/1.1 enabled, the actual server will return the Connection header to the proxy server. And if you are unlucky (as we were), the front server will just proxy the header to the client, together with the rest of the response.

So what, you'd ask. What's wrong with the header?

Well, the problem is, it is considered unnecessary for HTTP/2. The docs say:

The Connection header needs to be set to "keep-alive" for this header to have any meaning. Also, Connection and Keep-Alive are ignored in HTTP/2; connection management is handled by other mechanisms there.

The actual HTTP/2 RFC states otherwise

This means that an intermediary transforming an HTTP/1.x message to HTTP/2 will need to remove any header fields nominated by the Connection header field, along with the Connection header field itself. Such intermediaries SHOULD also remove other connection-specific header fields, such as Keep-Alive, Proxy-Connection, Transfer-Encoding, and Upgrade, even if they are not nominated by the Connection header field.

What could be considered unnecessary and be ignored, for some (hello Apple) is considered illegal. By analyzing TCP packets we have found out that Safaris internally fail with Error: PROTOCOL_ERROR (1) and guess what, they repeat the request over and over.

The issue has finally been narrowed down and it turned out others have identified it too in a similar scenario.

A solution is then to clear the Connection header from the outgoing response so that the browser never gets it. This potentially slows down browsers that can't make use of HTTP/2 connection optimizations but luckily, most modern browsers have supported HTTP/2 for some time.
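What the RFC fragment quoted above asks of an intermediary can be sketched as a pure function over a headers object. This is only an illustration: stripConnectionHeaders is a hypothetical helper, and how it would be hooked into a particular reverse proxy depends on the proxy and is not shown here.

```javascript
// Connection-specific headers the RFC fragment lists, lowercased for comparison
const ALWAYS_REMOVED = ['connection', 'keep-alive', 'proxy-connection', 'transfer-encoding', 'upgrade'];

// Hypothetical helper: returns a copy of the headers without the Connection header,
// without any header nominated by it, and without the other connection-specific headers
function stripConnectionHeaders(headers) {
  const toRemove = new Set(ALWAYS_REMOVED);

  // collect the header names nominated by the Connection header
  for (const name of Object.keys(headers)) {
    if (name.toLowerCase() === 'connection') {
      String(headers[name]).split(',').forEach(token => {
        const nominated = token.trim().toLowerCase();
        if (nominated) {
          toRemove.add(nominated);
        }
      });
    }
  }

  const result = {};
  for (const name of Object.keys(headers)) {
    if (!toRemove.has(name.toLowerCase())) {
      result[name] = headers[name];
    }
  }
  return result;
}

// Usage: a response as it could come back from an HTTP/1.1 backend
const cleaned = stripConnectionHeaders({
  'Content-Type': 'text/html',
  'Connection': 'keep-alive, X-Custom',
  'Keep-Alive': 'timeout=5',
  'X-Custom': '1'
});
console.log(cleaned);
```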

A happy ending and, well, it looks like it is a bug on Apple's side that should be taken care of to make Safari more compatible with other browsers, which just ignore the header rather than fail so miserably on it.

Monday, April 24, 2017

Using ngrok to expose any local server to the Internet

var httpProxy = require('http-proxy');

httpProxy.createProxyServer({

target : 'http://a.server.in.your.local.network:80',

changeOrigin : true,

autoRewrite : true

})

.listen(3000);

The two options make sure that both host and location headers are correctly rewritten. Please consult the list of other available options of the http-proxy module if you need more specific behavior.

Then just invoke the ngrok as usual

ngrok http 3000

navigate to the address returned by ngrok and you will get the response from the server behind the proxy.

Friday, March 10, 2017

Visual Studio 2017 doesn't seem to support Silverlight but Silverlight itself is supported till 2021

This has been a great week as VS2017 has finally got its RTM version. Unfortunately, for some developers the news isn't that great.

It seems the new VS drops the support for the Silverlight:

Silverlight projects are not supported in this version of Visual Studio. To maintain Silverlight applications, continue to use Visual Studio 2015.

Great. Note, though, that Silverlight 5 support lasts till 2021.

This effectively means that starting from now, till 2021, people will have to stick with VS2015 for some projects, even though VS2017, VS2019, VS2021 or any other newer version is released.

Nice one, Microsoft!

Friday, February 3, 2017

Calling promises in a sequence

One of the top questions about promises I was asked lately is a question on how to call promises in a sequence so that the next starts only after the previous one completes.

The question is asked mostly by people with a C# background, where a Task has to be started in an explicit way. In contrast, in Javascript, as soon as you have the promise object, the execution has already started. Thus, the following naive approach doesn’t work:

function getPromise(n) {

return new Promise( (res, rej) => {

setTimeout( () => {

console.log(n);

res(n);

}, 1000 );

});

}

console.log('started');

// in parallel

Promise.all( [1,2,3,4,5].map( n => getPromise(n)) )

.then( result => {

console.log('finished with', result);

});

The above code yields 1,2,3,4,5 as soon as 1 second passes and there are no further delays between the numbers, although people expect them here. The problem with this approach is that as soon as getPromise is called, the promise code starts. There are then 5 timers in total, started at almost the very same time, the time it takes the map function to loop over the source array.

In order to call promises in a sequence we have to completely rethink the approach. Each next promise should be invoked from within the then handler of the previous promise, as then is called only when a promise is fulfilled. A simple structural trick is to use the array's reduce in order to loop over the array so that each time we have access to the previous “value” (the previous promise) and the current value (current number/whatever from the array). The reduce needs an “initial value” and it should be a promise, so we just feed it with a dummy, resolved promise.

function getPromise(n) {

return new Promise( (res, rej) => {

setTimeout( () => {

console.log(n);

res(n);

}, 1000 );

});

}

console.log('started');

// in a sequence

[1,2,3,4,5].reduce( (prev, cur) => {

return prev.then( result => {

return getPromise(cur);

});

}, Promise.resolve() )

.then( result => {

console.log('finished with', result);

});

A neat trick worth remembering.
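For comparison, on a runtime with async function support the same sequencing can be written as a plain loop. This is a sketch only: runInSequence is a hypothetical helper name and the delay is shortened to 10 ms to keep the example quick.

```javascript
function getPromise(n) {
  return new Promise((res) => {
    setTimeout(() => {
      console.log(n);
      res(n);
    }, 10);
  });
}

// Hypothetical helper: the next promise is created only after the previous one is fulfilled
async function runInSequence(items) {
  const results = [];
  for (const item of items) {
    results.push(await getPromise(item));
  }
  return results;
}

console.log('started');

const finished = runInSequence([1, 2, 3, 4, 5]);

finished.then(results => {
  console.log('finished with', results);
});
```

Note one difference from the reduce version: there the final then receives only the result of the last promise, while this sketch collects all results in an array.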

Thursday, January 12, 2017

Node.js 7.x async/await example with express and mssql

Async/await has finally found its home in node 7.x and it’s just great, we’ve been waiting for ages. Not only does it work great, with Visual Studio Code you can write and debug async/await like any other code. Just make sure you have runtimeArgs in your launch.json as a temporary fix until it’s no longer required:

{

// Use IntelliSense to learn about possible Node.js debug attributes.

// Hover to view descriptions of existing attributes.

// For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387

"version": "0.2.0",

"configurations": [

{

"type": "node",

"request": "launch",

"name": "Launch Program",

"program": "${workspaceRoot}/app.js",

"cwd": "${workspaceRoot}",

"runtimeArgs": [

"--harmony"

]

},

{

"type": "node",

"request": "attach",

"name": "Attach to Process",

"port": 5858

}

]

}

Async/await could possibly sound like an ephemeral alien until you realize that a lot of good code has already been written with Promises, and async/await is just sugar over them. In the example below I read the data from a simple Sql Server table using node’s mssql module:

CREATE TABLE [dbo].[Parent](
    [ID] [int] IDENTITY(1,1) NOT NULL,
    [ParentName] [nvarchar](150) NOT NULL,
    CONSTRAINT [PK_Parent] PRIMARY KEY CLUSTERED
    (
        [ID] ASC
    )
) ON [PRIMARY]

I also need a connection string somewhere

// settings.js
module.exports.connectionString = 'server=.\\sql2012;database=databasename;user id=user;password=password';

Note that since tedious doesn't support integrated auth at the moment, you are stuck with sql's username/pwd authentication. The mssql on the other hand uses tedious internally.

Here goes an example of the "old-style" code, written with promises

var sql = require('mssql');
var settings = require('./settings');

var conn = new sql.Connection(settings.connectionString);

conn.connect()

.then( () => {

var request = new sql.Request(conn);

request.query('select * from Parent')

.then( recordset => {

recordset.forEach( r => {

// do something with single record

});

conn.close();

})

.catch( err => {

console.log( err );

});

})

.catch( err => {

console.log(err);

});

(note that you could possibly avoid nesting thens by just returning the request.query promise so that the next, chained then would refer to it.) However, with async/await the same becomes

try {

var conn = new sql.Connection(settings.connectionString);

await conn.connect();

var request = new sql.Request(conn);

var recordset = await request.query('select * from Parent');

recordset.forEach( r => {

// do something with single record

});

conn.close();

}

catch ( err ) {

console.log( err );

}

Pretty impressive, if you ask me. If you don't mind occasional awaits, the code is clean, no .thens, no .catches. Remember that express middlewares can be async too

var app = express();

app.get('/', async (req, res) => {

// code that awaits

});
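One caveat worth sketching: in express 4.x a rejected promise returned from an async handler is not passed to the error-handling middleware automatically, so an exception thrown after an await would go unhandled. A small wrapper can forward it to next. asyncHandler is a hypothetical helper written for this illustration, not part of express, and the fake req, res and next below exist only to make the sketch runnable without express.

```javascript
// Hypothetical helper: wraps an async handler so that rejections end up in next(err)
function asyncHandler(handler) {
  return function (req, res, next) {
    new Promise(resolve => resolve(handler(req, res, next))).catch(next);
  };
}

// With express it would be used like this:
// app.get('/', asyncHandler(async (req, res) => { /* code that awaits */ }));

// Self-contained demonstration with fake request/response objects
const wrapped = asyncHandler(async (req, res) => {
  throw new Error('query failed');
});

let forwarded = null;
const done = new Promise(resolve => {
  wrapped({}, {}, err => {
    forwarded = err;
    resolve();
  });
});

done.then(() => {
  console.log('forwarded to next:', forwarded.message);
});
```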

All this means the callback hell is hopefully gone forever.